
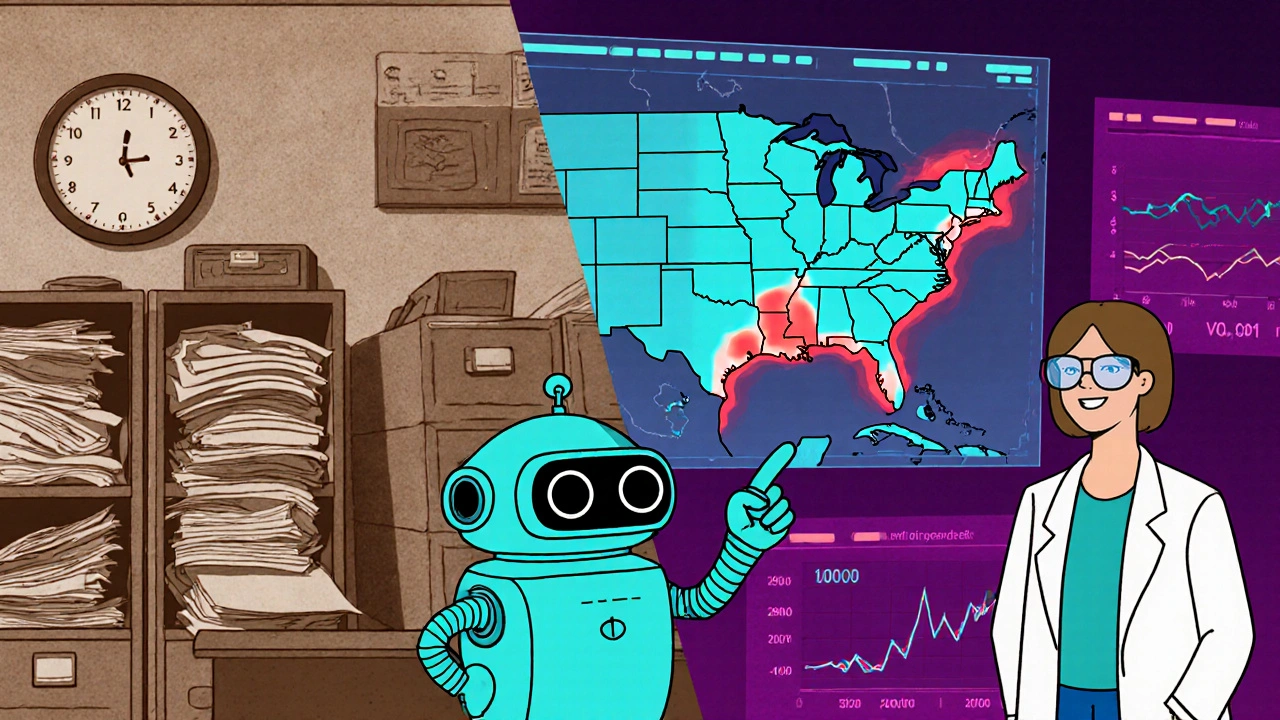

Every year, thousands of people suffer serious harm from medications that seemed safe when approved. Most of these cases go unnoticed until it’s too late - because traditional drug safety systems rely on doctors and patients to report problems manually. That’s slow, incomplete, and often misses the early warning signs. But now, artificial intelligence is changing that. AI doesn’t wait for reports. It scans millions of data points every hour - from hospital records and social media posts to pharmacy claims and clinical notes - looking for patterns humans would never see. This isn’t science fiction. It’s happening right now, and it’s saving lives.

How AI Finds Hidden Drug Risks

Before AI, drug safety teams reviewed maybe 5% of all adverse event reports. The rest? Filed away, ignored, or lost in piles of paperwork. Now, machine learning models process 100% of incoming data. These systems don’t just count complaints. They connect dots across sources. A patient in Ohio posts about dizziness after taking a new blood pressure pill. A week later, a nurse in Texas notes the same symptom in an electronic health record. Three days after that, a pharmacy in Florida flags a spike in refills for anti-nausea meds prescribed alongside that same drug. AI links these events automatically - even if they’re written in different languages, coded differently, or buried in free-text notes.
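To make the “connecting dots” idea concrete, here is a minimal sketch in Python. It assumes an upstream step has already normalized each record into a drug name and a symptom term, which is the hard part in practice. The `Event` type, the `cross_source_signals` function, the 30-day window, and the three-source threshold are all hypothetical choices for illustration, not how any particular vendor does it.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Event:
    source: str    # e.g. "social_media", "ehr", "pharmacy_claims"
    drug: str      # assumed to be normalized upstream
    symptom: str   # assumed to be normalized upstream
    seen_on: date


def cross_source_signals(events, window_days=30, min_sources=3):
    """Return (drug, symptom) pairs reported by at least `min_sources`
    distinct sources within some `window_days`-long window."""
    by_pair = defaultdict(list)
    for e in events:
        by_pair[(e.drug, e.symptom)].append(e)

    window = timedelta(days=window_days)
    flagged = []
    for pair, group in by_pair.items():
        group.sort(key=lambda e: e.seen_on)
        for i, first in enumerate(group):
            # Distinct sources mentioning this pair in the window starting at `first`.
            sources = {e.source for e in group[i:]
                       if e.seen_on - first.seen_on <= window}
            if len(sources) >= min_sources:
                flagged.append(pair)
                break
    return flagged


# Made-up events for illustration only.
events = [
    Event("social_media", "drug_x", "dizziness", date(2025, 3, 1)),
    Event("ehr", "drug_x", "dizziness", date(2025, 3, 8)),
    Event("pharmacy_claims", "drug_x", "dizziness", date(2025, 3, 11)),
    Event("ehr", "drug_y", "rash", date(2025, 3, 2)),
]
print(cross_source_signals(events))  # [('drug_x', 'dizziness')]
```

A flagged pair here is only a candidate for review. It says three independent sources agree, not that the drug caused the symptom.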

Natural Language Processing (NLP) is the engine behind this. It reads physician notes, patient forums, and even handwritten charts once they have been scanned and digitized. A 2025 study by Lifebit.ai showed NLP tools extract safety signals from unstructured text with 89.7% accuracy. That means AI can spot a rare side effect - like sudden liver damage from a new diabetes drug - before it shows up in official reports. In one case, a GlaxoSmithKline AI system detected a dangerous interaction between a new anticoagulant and a common antifungal within three weeks of launch. Without AI, that signal might have taken six months to surface. By then, hundreds could have been hurt.
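As a rough illustration of what “extracting a signal from free text” means, the sketch below pairs drug and symptom mentions that appear in the same sentence. It is a rule-based stand-in, not the trained models production systems rely on. The vocabularies, the `extract_mentions` function, and the sample note are invented for this example.

```python
import re

# Hypothetical, tiny vocabularies. A real system would use a medical
# terminology and a trained model rather than hand-written lists.
DRUG_TERMS = {"drug x": "drug_x", "blue pills": "drug_x"}
SYMPTOM_TERMS = {
    "dizzy": "dizziness",
    "dizziness": "dizziness",
    "rash": "rash",
    "nauseous": "nausea",
}


def _found(terms, sentence):
    """Normalized names of every vocabulary term present in the sentence."""
    return {norm for term, norm in terms.items()
            if re.search(rf"\b{re.escape(term)}\b", sentence)}


def extract_mentions(text):
    """Return sorted (drug, symptom) pairs co-mentioned in one sentence."""
    pairs = set()
    for sentence in re.split(r"[.!?]+", text.lower()):
        drugs = _found(DRUG_TERMS, sentence)
        symptoms = _found(SYMPTOM_TERMS, sentence)
        pairs.update((d, s) for d in drugs for s in symptoms)
    return sorted(pairs)


note = ("Started the blue pills last week. "
        "Felt dizzy and nauseous since taking Drug X! No rash.")
print(extract_mentions(note))
# [('drug_x', 'dizziness'), ('drug_x', 'nausea')]
```

Note what this toy version cannot do: it has no notion of negation, so “no rash after Drug X” in a single sentence would be wrongly counted. Handling cases like that is a large part of why real pipelines use statistical models instead of keyword lists.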

The Systems Powering Real-Time Safety

The U.S. Food and Drug Administration launched its Emerging Drug Safety Technology Program in 2023 to push AI adoption. At the core is the FDA’s Sentinel System - a network that pulls data from over 300 million patient records across hospitals, insurers, and clinics. Since going live, it’s run more than 250 safety analyses. One of its biggest wins? Detecting safety issues with 17 new drugs within six months of approval - something manual review could never do.

But it’s not just government systems. Companies like IQVIA and Lifebit are building AI platforms that scan social media, claims databases, and genomic records. Lifebit processes 1.2 million patient records daily for 14 major drugmakers. Their models use federated learning - meaning they analyze data where it lives (like in a hospital’s server) without moving it. That keeps patient privacy intact while still finding patterns across borders and systems.
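The core idea of analyzing data where it lives can be sketched in a few lines. In this simplified example each site computes a summary locally and only that summary travels to a coordinator, which combines the results weighted by sample size. Real federated learning averages model parameters over many rounds and adds further privacy safeguards. The function names and the toy records are assumptions made for illustration.

```python
def local_update(records):
    """Runs inside one site. `records` is a list of (took_drug, had_event)
    booleans that never leaves the site; only summary values are returned."""
    exposed = [had_event for took_drug, had_event in records if took_drug]
    rate = sum(exposed) / len(exposed) if exposed else 0.0
    return {"n": len(exposed), "event_rate": rate}


def federated_average(updates):
    """Runs at the coordinator: combine site summaries weighted by sample size."""
    total = sum(u["n"] for u in updates)
    if total == 0:
        return 0.0
    return sum(u["n"] * u["event_rate"] for u in updates) / total


# Made-up patient rows for two hypothetical hospitals.
site_a = [(True, True), (True, False), (True, False), (True, False), (False, False)]
site_b = [(True, True), (True, True), (False, True), (True, False),
          (True, False), (True, False), (True, False)]

updates = [local_update(site_a), local_update(site_b)]
pooled_rate = federated_average(updates)
print(round(pooled_rate, 3))  # 0.3
```

The coordinator ends up with the same pooled event rate it would get from merging the raw rows (3 events among 10 exposed patients), yet it never sees an individual record.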

These systems don’t rely on a single technique. They combine supervised learning (trained on past adverse events), clustering (grouping similar symptoms), and reinforcement learning (improving over time based on feedback). The result? A safety net that gets smarter every day.

Why Traditional Methods Fall Short

Before AI, drug safety was reactive. You waited for doctors to file reports. You waited for patients to call in. You waited for regulators to spot trends. The process took weeks - sometimes months - to confirm a signal. And even then, you only saw what was reported. Studies show that up to 90% of adverse drug reactions go unreported. Why? Because patients don’t know what to report. Doctors are overworked. Paper forms get lost.

AI fixes that by being always on. It doesn’t sleep. It doesn’t miss a note. It doesn’t ignore a tweet. In fact, AI now captures 12-15% of adverse events that were completely invisible before - like patients complaining on Reddit about a rash after taking a new antidepressant. Those posts used to vanish into the noise. Now, they trigger alerts.

But AI isn’t perfect. It’s only as good as the data it’s trained on. If a drug was mostly tested on middle-aged white men, the AI might miss side effects that show up in older women, people of color, or those with chronic conditions. A 2025 Frontiers analysis found that AI systems failed to detect safety signals in low-income communities because their health records were incomplete. That’s not a flaw in the code - it’s a flaw in the system. And it’s a problem the industry is finally starting to address.

Where AI Still Needs Humans

AI can find signals. But it can’t prove cause. Just because a patient took a drug and then had a seizure doesn’t mean the drug caused it. Maybe they had a genetic condition. Maybe they drank alcohol. Maybe they were stressed. That’s where pharmacovigilance experts come in. Human reviewers still assess causality - the real question: “Did this drug cause this harm?”

AI gives them the list. Humans decide what matters. The European Medicines Agency made this clear in March 2025: AI tools must have human oversight. No automation of final decisions. No black boxes. Every alert must be traceable. That’s why top companies now invest in explainable AI (XAI). These systems don’t just say “risk detected.” They show why: “This signal appeared in 87 patients who took Drug X and Drug Y together. 92% of them had no prior history of seizures. The pattern emerged within 14 days of co-administration.”
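What a traceable alert might look like in practice: the sketch below builds a human-readable explanation from the underlying cases and keeps the case IDs as an audit trail. The `Case` fields, the `explain_signal` function, and the sample data are hypothetical and are not drawn from any regulator’s specification.

```python
from dataclasses import dataclass


@dataclass
class Case:
    patient_id: str
    prior_history: bool              # had the event before taking the drugs
    days_from_coadmin_to_event: int


def explain_signal(drug_a, drug_b, event, cases, window_days=14):
    """Summarize the evidence behind an alert so a reviewer can trace it."""
    in_window = [c for c in cases
                 if c.days_from_coadmin_to_event <= window_days]
    no_history = sum(not c.prior_history for c in in_window)
    share = 100 * no_history / len(in_window) if in_window else 0.0
    return {
        "summary": (
            f"{event} reported in {len(in_window)} patients who took "
            f"{drug_a} and {drug_b} together, within {window_days} days of "
            f"co-administration; {share:.0f}% had no prior history."
        ),
        "case_ids": [c.patient_id for c in in_window],  # audit trail
        "requires_human_review": True,  # causality stays with the reviewer
    }


# Made-up cases for illustration only.
cases = [
    Case("p1", False, 3),
    Case("p2", False, 9),
    Case("p3", True, 12),
    Case("p4", False, 14),
    Case("p5", False, 40),  # outside the 14-day window
]
alert = explain_signal("Drug X", "Drug Y", "Seizure", cases)
print(alert["summary"])
```

The point of the design is that every number in the summary can be recomputed from `case_ids`, so a reviewer can open the underlying cases instead of trusting the alert on faith.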

Dr. A. Nagar, whose 2025 paper was cited six times, put it simply: “AI finds the needle. The human knows which needle matters.”

What It Takes to Implement AI in Drug Safety

Getting AI up and running isn’t plug-and-play. It takes time, money, and training. According to a 2025 survey of 147 pharmacovigilance managers, 78% saw at least a 40% drop in case processing time after AI adoption. But 52% struggled with integration. Many old safety databases - some dating back to the 1990s - weren’t built to talk to AI tools. Fixing that took an average of 7.3 months.

Training is another hurdle. Pharmacovigilance professionals now need to understand data science basics. IQVIA found that 73% of companies provide 40-60 hours of training in data literacy, model interpretation, and regulatory expectations. One Reddit user in r/pharmacovigilance shared that their team spent months tuning NLP tools to recognize drug names in local slang - like “blue pills” for a specific antidepressant.

Data quality is the biggest silent killer. If your EHRs have missing fields, inconsistent coding, or outdated patient demographics, the AI will learn those errors. That’s why 35-45% of implementation time goes into cleaning data - not building models. And even then, validation documentation for FDA-approved AI tools often exceeds 200 pages per algorithm. Commercial vendor tools? Often half that - and with mixed results.
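A first pass at that cleaning work is usually just measuring the damage. The sketch below counts records with missing required fields and records whose event code is not in the expected coding list. The field names, the `audit_records` function, and the toy records are assumptions for illustration.

```python
def audit_records(records, required_fields, known_codes):
    """Return the share of records with a missing required field and the
    share whose `event_code` is not in the expected coding list."""
    if not records:
        return {"missing_rate": 0.0, "unknown_code_rate": 0.0}
    missing = sum(
        any(r.get(field) in (None, "") for field in required_fields)
        for r in records
    )
    unknown = sum(r.get("event_code") not in known_codes for r in records)
    return {
        "missing_rate": missing / len(records),
        "unknown_code_rate": unknown / len(records),
    }


# Made-up records for illustration only.
records = [
    {"patient_id": "p1", "age": 54, "event_code": "DIZZINESS"},
    {"patient_id": "p2", "age": None, "event_code": "RASH"},
    {"patient_id": "p3", "age": 71, "event_code": "dizzy spells"},
    {"patient_id": "p4", "age": 38, "event_code": "RASH"},
]
report = audit_records(
    records,
    required_fields=["patient_id", "age", "event_code"],
    known_codes={"DIZZINESS", "RASH"},
)
print(report)  # {'missing_rate': 0.25, 'unknown_code_rate': 0.25}
```

Running a report like this per data source before any model training makes it obvious where the cleaning effort has to go.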

The Future: From Detection to Prevention

The next leap isn’t just faster detection. It’s prediction. Researchers are now training AI to forecast which patients are at highest risk before they even take a drug. By combining genomic data, lifestyle factors, and past medical history, systems can flag someone who’s genetically prone to liver toxicity from a certain medication. That’s not theory - seven academic medical centers are running Phase 2 trials on this right now.

Wearables are adding another layer. Smartwatches now track heart rate variability, sleep patterns, and activity levels. AI can detect subtle changes - like a 12% drop in nighttime heart rate - that might signal an early adverse reaction before the patient even feels sick. One pilot program in Australia captured 8-12% of previously missed adherence issues just by analyzing wearable data.
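The heart-rate example boils down to comparing recent readings against a personal baseline. Here is a minimal sketch, assuming nightly average heart rates are already available. The `nighttime_hr_drop` function, the 12% threshold as a default, and the sample readings are illustrative, not clinical guidance.

```python
from statistics import mean


def nighttime_hr_drop(baseline_nights, recent_nights, threshold=0.12):
    """Compare mean nighttime heart rate over recent nights with a personal
    baseline. Returns (relative_drop, flagged)."""
    baseline = mean(baseline_nights)
    recent = mean(recent_nights)
    drop = (baseline - recent) / baseline
    return drop, drop >= threshold


# Made-up readings in beats per minute.
baseline = [60, 62, 61, 59, 58]   # mean 60
recent = [52, 53, 51]             # mean 52
drop, flagged = nighttime_hr_drop(baseline, recent)
print(f"{drop:.1%}", flagged)  # 13.3% True
```

Using each patient’s own baseline matters here, because a resting rate that is normal for one person can be a meaningful change for another.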

By 2027, AI is expected to improve causal inference by 60%. That means it won’t just say “this drug might be linked to this side effect.” It will say, “This drug causes this side effect in patients with Gene X and low vitamin D levels.” That’s personalized safety - the end goal.

Who’s Using This Now?

As of Q1 2025, 68% of the top 50 pharmaceutical companies use AI in pharmacovigilance. It’s not optional anymore. The market is growing fast - from $487 million in 2024 to an expected $1.84 billion by 2029. The big players are IQVIA, Lifebit, and the FDA’s own Sentinel System. But smaller firms are catching up. Even regional drugmakers are now leasing AI tools from cloud providers instead of building from scratch.
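For a sense of scale, the two market figures above imply a compound annual growth rate that can be checked with a few lines of arithmetic. The five-year span from 2024 to 2029 is taken from the dates in the text.

```python
start_usd = 487e6    # 2024 market size from the text
end_usd = 1.84e9     # projected 2029 market size from the text
years = 2029 - 2024

# Compound annual growth rate: the constant yearly growth that would
# turn the start value into the end value over `years` years.
cagr = (end_usd / start_usd) ** (1 / years) - 1
print(f"{cagr:.1%}")
```

That works out to roughly 30% growth per year, compounded.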

Regulators are keeping pace. The FDA and EMA both released new guidance in 2025 requiring transparency in AI algorithms. No more hidden models. No more unexplained alerts. Every decision must be auditable. That’s a win for patients - and for trust in the system.

What’s Next?

AI won’t replace pharmacovigilance experts. But experts who use AI will replace those who don’t. That’s the message from former FDA Commissioner Robert Califf - and it’s ringing true in labs and boardrooms worldwide. The future of drug safety isn’t about more reports. It’s about smarter detection, earlier intervention, and personalized risk assessment. The technology is here. The question isn’t whether you’ll use it. It’s whether you’re ready to use it well.

How does AI detect drug safety problems faster than humans?

AI scans millions of data points daily - from electronic health records, social media, pharmacy claims, and clinical notes - using natural language processing and machine learning. It finds patterns across unrelated sources that humans would miss. While a human team might review 5-10% of reports, AI analyzes 100%. Signal detection that once took weeks now happens in hours.

Can AI replace pharmacovigilance professionals?

No. AI finds potential safety signals, but humans determine if a drug actually caused the reaction. AI can’t assess context - like a patient’s genetics, lifestyle, or other medications - the way a trained expert can. Regulatory agencies like the EMA and FDA require human oversight in final decisions. The best outcome comes from AI handling volume and speed, and humans handling judgment.

What are the biggest challenges with AI in drug safety?

The biggest challenges are data bias, integration with old systems, and lack of transparency. If training data lacks diversity - say, underrepresenting rural or low-income populations - AI may miss safety signals in those groups. Many companies struggle to connect AI tools with legacy databases, which can take over seven months. And “black box” algorithms that can’t explain their reasoning make regulators and clinicians nervous.

How accurate are AI systems in spotting adverse drug reactions?

Current NLP-based systems achieve 89.7% accuracy in extracting safety signals from unstructured text, according to Lifebit.ai’s 2025 case studies. Reinforcement learning models have improved detection accuracy by over 22% in recent studies. However, accuracy depends heavily on data quality. In clean, well-coded datasets, AI outperforms humans. In messy or biased data, results vary.

Is AI in drug safety regulated?

Yes. The FDA and EMA both issued new guidance in 2025 requiring transparency, reproducibility, and human oversight for AI tools used in pharmacovigilance. The FDA’s Emerging Drug Safety Technology Program now reviews all AI-driven signal detection tools. Validation documentation must be detailed - often over 200 pages - to prove the model works reliably and fairly across populations.

What’s the future of AI in drug safety?

The future is proactive, not reactive. AI will soon predict which patients are at risk before they take a drug, using genomic data, wearable metrics, and lifestyle history. By 2027, AI is expected to improve causal inference by 60%, meaning it will distinguish true drug effects from coincidences much better. Fully automated case processing is still 3-5 years away, but real-time safety monitoring is already here.

Ravi Singhal

ai is cool and all but i seen folks in rural india get prescribed stuff and their only record is a scribbled note on a napkin at the clinic. how’s the machine gonna read that? also why no one talking about how these systems only get trained on data from rich countries? we got a whole continent out here with zero digital footprints but still taking meds

Victoria Arnett

I think people are missing the real issue here AI doesn’t care if you’re poor or old or disabled it just sees patterns and if your data isn’t there you’re invisible not just ignored but erased

adam hector

Let’s be real this isn’t about safety it’s about liability reduction for Big Pharma. AI finds the needle so they can say ‘we detected it early’ while quietly pulling the drug off the market months after the first deaths. The real win here isn’t saving lives it’s protecting their stock price. Wake up people this is PR dressed up as progress

Wendy Tharp

You think this is about safety? Ha. The FDA approved 17 new drugs with AI in six months? That’s not vigilance that’s a death wish. Who’s auditing the auditors? Who made sure the AI wasn’t trained on data from pharma-sponsored trials? I’ve seen the reports. The same labs that test the drugs also build the AI models. This isn’t science it’s a rigged game

Carl Lyday

I work in pharmacovigilance and I’ve seen both sides. AI cut our review time from weeks to hours but the real value is in the noise reduction. We used to drown in 10k reports a month and 90% were duplicates or nonsense. Now AI filters the signal and we focus on what matters. It’s not perfect but it’s the best tool we’ve ever had. The problem isn’t the tech it’s the data gaps and lazy humans who think AI does everything

Dr. Marie White

I appreciate the optimism but I worry about the patients who don’t have smartphones or internet access or even reliable EHRs. If AI is making decisions based on digital footprints… what happens to the elderly in nursing homes who can’t tweet about their dizziness? Or the undocumented worker who sees a clinic once a year? The system is getting smarter but is it getting fairer?

Sharon M Delgado

I just want to say - this is beautiful. Truly. The way AI connects dots across languages, systems, and continents… it’s like a digital nervous system for human health. And the fact that federated learning keeps privacy intact? That’s not just innovation - that’s ethics in motion. I’m so proud to live in a time when technology is finally starting to serve people instead of corporations. 🌍❤️🩺

HALEY BERGSTROM-BORINS

I’ve been following this since 2023. The FDA’s Sentinel system? It’s a front. I’ve seen the internal memos. The AI is designed to flag *low-risk* signals to avoid panic. The real red flags? They get buried under ‘insufficient evidence’ or ‘low prevalence’. This isn’t safety - it’s damage control with a fancy algorithm. And don’t get me started on the 200-page validation docs… they’re just theater. The real code? Still a black box. 🕵️♀️💉